Introduction

AI-powered coding tools are transforming how engineers work, from writing code faster to reducing boilerplate. But here’s the truth: when production issues hit, or when you need to design systems that scale and perform reliably, no AI tool can replace deep technical fundamentals. These skills separate average coders from true engineers.

Here’s a breakdown of the key areas every software engineer should master today:

1. Redis: High-Speed Data Access

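AI tools can autocomplete your queries, but when you need blazing-fast data retrieval or to handle sudden traffic spikes, Redis expertise is invaluable. Learn its data structures (strings, hashes, lists, sets, sorted sets), caching patterns such as cache-aside with expiry, and its persistence options (RDB snapshots and the append-only file).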
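To make the caching pattern concrete, here is a minimal cache-aside sketch. It uses an in-memory `TTLCache` class as a stand-in for Redis so it runs without a server; `TTLCache`, `get_user`, and `load_from_db` are hypothetical names for illustration, not part of any Redis client.

```python
import time


class TTLCache:
    """In-memory stand-in for a Redis-like key/value store with expiry."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._clock = clock

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # lazily evict expired entries on read
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._data[key] = (value, self._clock() + ttl_seconds)


def get_user(user_id, cache, load_from_db, ttl_seconds=60):
    """Cache-aside: try the cache, fall back to the source, then populate."""
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_db(user_id)  # slow path: hit the source of truth
    cache.set(key, value, ttl_seconds)
    return value
```

With a real Redis client the shape stays the same: a read, a fallback to the database on a miss, and a write with an expiry. The TTL is the knob that trades freshness for load on the database.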

2. Docker & Kubernetes: Building and Scaling

Code completion doesn’t deploy containers. Knowing how to package applications with Docker and orchestrate them with Kubernetes ensures your code is ready for the real world—build, ship, and scale seamlessly.

3. Message Queues (Kafka, RabbitMQ, SQS): Decoupling and Resilience

Distributed systems thrive on loose coupling. Message queues handle spikes, failures, and asynchronous workflows. AI won’t tell you why messages vanished at 3 AM, but hands-on queueing experience will.
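One common reason messages "vanish" is a consumer that drops them on failure instead of retrying or parking them. The sketch below simulates at-least-once processing with a retry limit and a dead-letter list, using only an in-process `deque`. `process_with_retries` and its parameters are hypothetical; a real broker (Kafka, RabbitMQ, SQS) provides its own acknowledgement and redelivery mechanisms.

```python
from collections import deque


def process_with_retries(messages, handler, max_attempts=3):
    """Process each message at least once; park repeated failures for inspection."""
    queue = deque((msg, 1) for msg in messages)
    dead_letters = []
    while queue:
        msg, attempt = queue.popleft()
        try:
            handler(msg)  # returning normally acts as the acknowledgement
        except Exception:
            if attempt >= max_attempts:
                dead_letters.append(msg)  # keep it visible instead of dropping it
            else:
                queue.append((msg, attempt + 1))  # requeue for another attempt
    return dead_letters
```

Because a message can be handed to the handler more than once, the handler should be idempotent. That is the price of not losing messages.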

4. Elasticsearch: Beyond Keyword Search

Search and analytics aren’t solved by simple LIKE queries, which scan rows for substrings and know nothing about relevance. Elasticsearch helps you build full-text search, log analysis, and real-time dashboards at scale.
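The core idea behind full-text search engines is the inverted index: a map from each term to the documents that contain it. Here is a toy sketch of that idea, with hypothetical helper names (`tokenize`, `build_index`, `search`). It is not how Elasticsearch is implemented internally and leaves out scoring, stemming, and everything else that makes a real engine useful.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result
```

A lookup touches only the terms in the query rather than scanning every document, which is why this approach scales where a substring scan does not.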

5. WebSockets: Real-Time Systems

Chats, games, trading dashboards—real-time needs a robust communication layer. WebSockets enable bi-directional, low-latency connections. Learning to design and maintain fault-tolerant channels is essential.
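Part of keeping a channel fault-tolerant is reconnecting sensibly after a drop. A common approach is exponential backoff with jitter, so thousands of clients don’t all reconnect at the same instant. The sketch below only computes the delays; `backoff_delays` and its defaults are assumptions for illustration, and the actual reconnect call depends on your WebSocket library.

```python
import random


def backoff_delays(base=0.5, factor=2.0, cap=30.0, attempts=6, rng=random.random):
    """Yield reconnect delays in seconds: exponential growth, capped, with full jitter."""
    for attempt in range(attempts):
        ceiling = min(cap, base * (factor ** attempt))
        # Full jitter: pick a random delay between 0 and the current ceiling.
        yield rng() * ceiling
```

A client would sleep for each delay in turn before retrying the connection, and reset the sequence once a connection succeeds.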

6. Distributed Tracing: Navigating Microservices

With dozens of services talking to each other, tracing gives visibility. Tools like Jaeger or Zipkin follow a single request across services and show which call was slow or failed. AI might say “trace it,” but you need to know how to instrument your services and propagate trace context between them.
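Tracing works because every service passes along a shared trace id. The sketch below follows the shape of the W3C Trace Context `traceparent` header (version, trace id, parent span id, flags). The function names are hypothetical, and in practice an instrumentation library such as OpenTelemetry generates and propagates this header for you.

```python
import secrets


def new_traceparent():
    """Start a new trace: 32 hex chars of trace id, 16 hex chars of span id."""
    trace_id = secrets.token_hex(16)
    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-01"  # version 00, sampled flag set


def child_traceparent(parent_header):
    """Keep the caller's trace id but mint a new span id for the outgoing call."""
    version, trace_id, _parent_span, flags = parent_header.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"
```

Each service reads the incoming header, records its own span under the same trace id, and sends a child header downstream. That shared id is what lets a tracing backend stitch the spans into one timeline.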

7. Logging & Monitoring: Your Early Warning System

Logs are your forensic evidence when things go wrong. Combine structured logging with metrics and dashboards (Prometheus for collection and alerting, Grafana for visualization) to spot problems before users do.
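Structured logging simply means emitting machine-parseable records instead of free-form strings. Here is a minimal sketch using Python’s standard `logging` module; the logger name `checkout` and the fields `request_id` and `duration_ms` are made up for illustration.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via `extra=` become attributes on the record.
        for key in ("request_id", "duration_ms"):
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload)


def build_logger(stream):
    logger = logging.getLogger("checkout")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate handlers on repeated setup
    logger.propagate = False  # keep output on our handler only
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


buffer = io.StringIO()  # stands in for stdout or a log shipper
log = build_logger(buffer)
log.info("payment accepted", extra={"request_id": "req-123", "duration_ms": 42})
```

Once every line is JSON, a log pipeline can filter by `request_id` or alert on `duration_ms` without fragile regexes.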

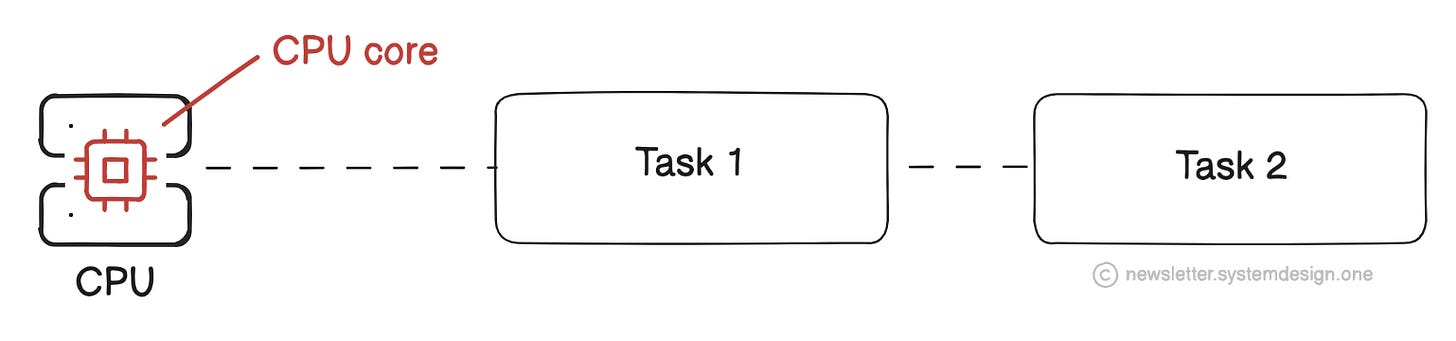

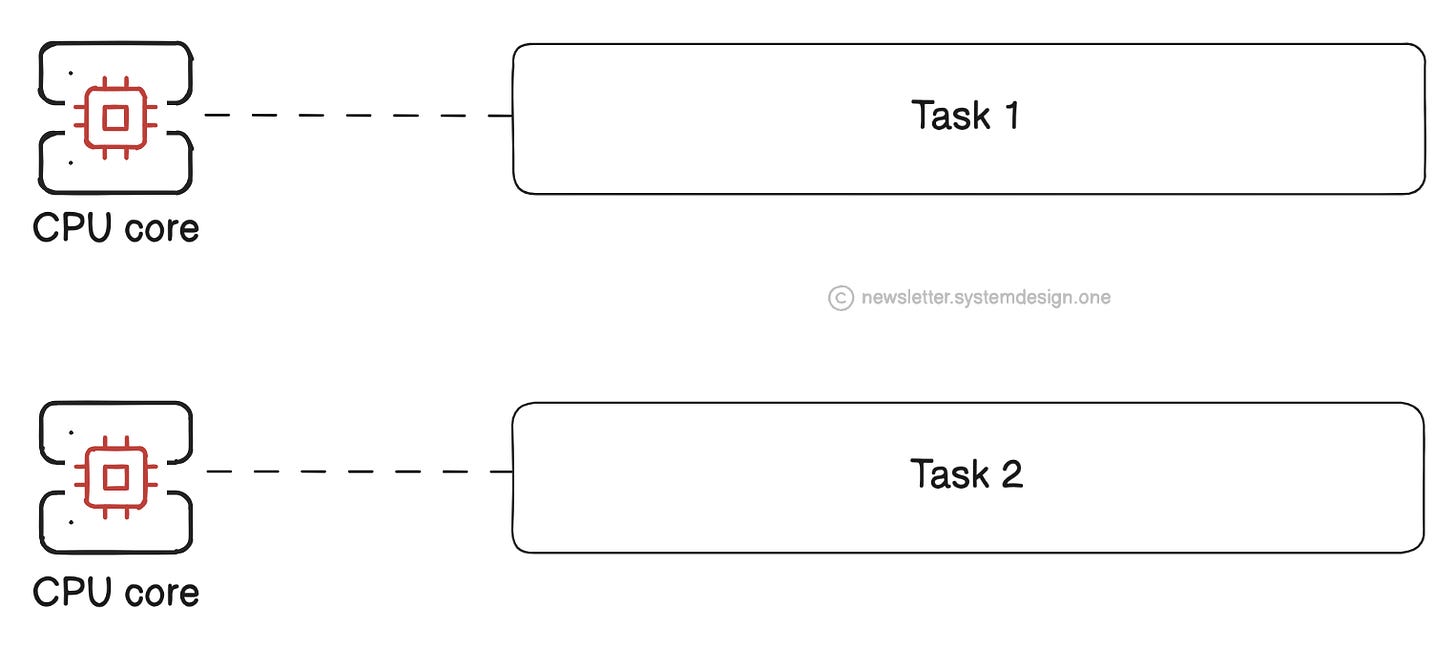

8. Concurrency & Race Conditions: Taming the Beast

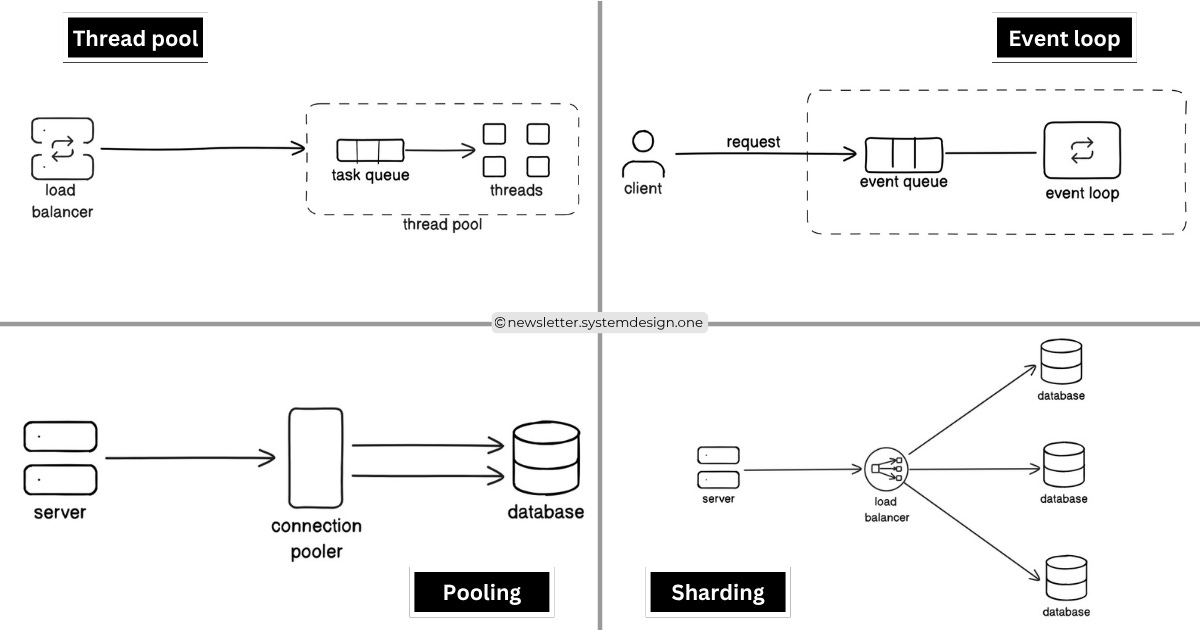

Async code and multithreading introduce subtle bugs. Understanding locks, semaphores, and safe concurrent patterns will save countless hours of debugging.
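The classic example is a shared counter. An increment is a read, an add, and a write, and two threads can interleave those steps and lose an update. A minimal sketch of guarding that step with a lock (`Counter` and `run_workers` are illustrative names):

```python
import threading


class Counter:
    """A counter whose read-modify-write step is guarded by a lock."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Without the lock, two threads could read the same value and
        # both write value + 1, silently losing one increment.
        with self._lock:
            self._value += 1

    @property
    def value(self):
        with self._lock:
            return self._value


def run_workers(counter, workers=8, increments=10_000):
    """Hammer the counter from several threads and return the final value."""

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value
```

With the lock in place the final value always equals `workers * increments`. Remove it and the result may come up short, often only under load, which is exactly what makes these bugs hard to find.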

9. Load Balancers & Circuit Breakers: Staying Up Under Stress

When services fail or traffic surges, load balancers spread requests across healthy instances, and circuit breakers stop callers from hammering a dependency that is already down. Knowing when and how to implement them is critical.
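A circuit breaker is a small state machine: closed (calls pass through), open (calls fail fast), and half-open (one trial call is allowed after a cool-down). The sketch below is a simplified, single-threaded version with assumed names and defaults. Production libraries add thread safety, metrics, and finer-grained failure policies.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker refuses a call without trying it."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit is open; failing fast")
            # Cool-down elapsed: half-open, so let this one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Failing fast matters because a caller that keeps waiting on a dead dependency ties up its own threads and connections, and the outage spreads upstream.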

10. API Gateways & Rate Limiting: Protecting Your Services

Public APIs are magnets for abuse. Gateways, throttling, and quota enforcement prevent downtime, overuse, and security holes.
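One widely used throttling algorithm is the token bucket: tokens refill at a steady rate, each request spends one, and the bucket’s capacity sets how large a burst is allowed. A minimal single-process sketch with assumed names follows. A real gateway would typically keep one bucket per client and store the state somewhere shared.

```python
import time


class TokenBucket:
    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self._clock = clock
        self._tokens = float(capacity)  # start full so an initial burst is allowed
        self._last = clock()

    def allow(self, cost=1.0):
        """Return True and spend tokens if the request fits, else False."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill for the time that has passed, never above capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

When `allow()` returns `False`, an HTTP API would usually respond with status 429 (Too Many Requests).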

11. SQL vs NoSQL: The Right Tool for the Job

Every database has strengths and trade-offs. Learn to evaluate schema design, consistency, and performance needs before picking SQL or NoSQL.

12. CAP Theorem & Consistency Models: Thinking Distributed

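The CAP theorem says that when a network partition happens, a distributed system has to choose between consistency and availability; it can’t guarantee both. Understanding that trade-off, and the consistency models between strong and eventual, makes you a system designer, not just a coder.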
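One place this trade-off becomes tangible is quorum replication, used here as an illustrative assumption rather than something every database does. With N replicas, a write acknowledged by W of them and a read that consults R of them are guaranteed to share at least one replica only when R + W > N. A tiny sketch with a hypothetical helper name:

```python
def quorums_overlap(n, r, w):
    """True when every read quorum must intersect every write quorum (R + W > N)."""
    if not (1 <= r <= n and 1 <= w <= n):
        raise ValueError("r and w must be between 1 and n")
    return r + w > n
```

For example, with 3 replicas, `r=2, w=2` overlaps, so a read sees at least one replica that took the latest acknowledged write. `r=1, w=1` is faster and stays available with fewer replicas up, but a read can land on a replica that missed the write.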

13. CDN & Edge Computing: Speeding Up Globally

Global users demand fast response times. CDNs and edge networks push content closer to users, reducing latency and improving reliability.

14. Security Basics: Building Trust

OAuth, JWT, encryption—these aren’t optional. A single security misstep can undo years of work. Learn to integrate security at every layer.
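To see what a JWT actually is under the hood, here is a standard-library sketch of signing and verifying an HS256 token. It is for understanding only: it skips expiry and other claim checks, the function names are made up, and a real service should use a vetted JWT library.

```python
import base64
import hashlib
import hmac
import json


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_token(token: str, secret: bytes) -> dict:
    """Return the claims if the signature matches, else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Always recompute with HS256 rather than trusting the header's "alg",
    # and compare in constant time to avoid leaking timing information.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    return json.loads(_b64url_decode(payload_b64))
```

Two things to notice: the payload is only encoded, not encrypted, so never put secrets in it; and anyone holding the signing key can mint valid tokens, so protect that key like a password.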

15. CI/CD & Git: Automating Quality

AI might generate code, but you still need robust pipelines for testing, deployments, and rollbacks. Master Git workflows and CI/CD tools for seamless releases.

Conclusion

AI will make you faster, but fundamentals make you effective. Write scripts, break systems, monitor failures, and learn by doing. These hands-on skills are what make you stand out—not just as someone who writes code, but as an engineer who builds resilient, scalable systems.